Unsupervised Reverse Domain Adaptation for Synthetic Medical Images via Adversarial Training

Mahmood F, Chen R, Durr NJ

IEEE Transactions on Medical Imaging 37:12 2018


Abstract

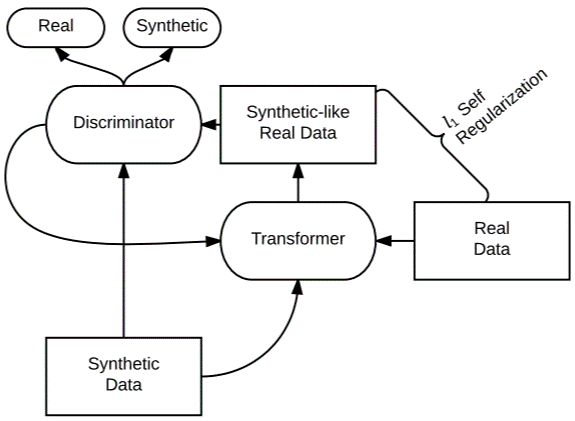

To realize the full potential of deep learning for medical imaging, large annotated datasets are required for training. Such datasets are difficult to acquire due to privacy issues, a lack of experts available for annotation, underrepresentation of rare conditions, and poor standardization. In conventional vision applications, the lack of annotated data has been addressed by using synthetic images refined via unsupervised adversarial training to look like real images. However, this approach is difficult to extend to general medical imaging because of the complex and diverse set of features found in real human tissues. We propose a novel framework that uses a reverse flow, where adversarial training is used to make real medical images more like synthetic images, and clinically relevant features are preserved via self-regularization. These domain-adapted, synthetic-like images can then be accurately interpreted by networks trained on large datasets of synthetic medical images. We implement this approach on the notoriously difficult task of depth estimation from monocular endoscopy, which has a variety of applications in colonoscopy, robotic surgery, and invasive endoscopic procedures. We train a depth estimator on a large dataset of synthetic images generated using an accurate forward model of an endoscope and an anatomically realistic colon. Our analysis demonstrates that reverse domain adaptation improved the structural similarity of depth estimates in a real pig colon, predicted by a network trained solely on synthetic data, by 78.7%.
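
The reverse flow described in the abstract pairs an adversarial objective with a self-regularization term. The sketch below illustrates one common way such a combined loss can be written; it is not taken from the paper. The function name `refiner_loss`, the weight `lam`, the use of an L1 penalty, and the convention that the discriminator outputs the probability of an image being synthetic are all assumptions made for illustration.

```python
import math


def refiner_loss(real, refined, disc_prob_synthetic, lam=0.5):
    """Illustrative loss for a network that maps real images toward the synthetic domain.

    real, refined: flat lists of pixel intensities (input image and its adapted version).
    disc_prob_synthetic: discriminator outputs in (0, 1], assumed here to be the
        probability that each refined sample is synthetic.
    lam: hypothetical weight on the self-regularization term.
    """
    eps = 1e-12  # avoids log(0)

    # Adversarial term: small when the discriminator believes the refined
    # images are synthetic.
    adversarial = -sum(math.log(p + eps) for p in disc_prob_synthetic) / len(disc_prob_synthetic)

    # Self-regularization term (L1 here, by assumption): penalizes departures
    # from the input so that image content is preserved.
    self_reg = sum(abs(a - b) for a, b in zip(refined, real)) / len(real)

    return adversarial + lam * self_reg


# Toy example: four pixels, each shifted by 0.2, and a fully fooled discriminator.
real = [0.0, 0.5, 1.0, 0.25]
refined = [0.2, 0.7, 0.8, 0.45]
loss = refiner_loss(real, refined, disc_prob_synthetic=[1.0])
```

Raising `lam` in this sketch favors fidelity to the input image over similarity to the synthetic domain; the balance used in the actual work is not stated in the abstract.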